How To Run An Epic Game Day ~ Chaos Engineering 101


Just how prepared is your team for a crippling DDoS attack? How about a DNS misconfiguration? What about a CDN failure? Do you know or are you guessing?

My current employer builds and maintains business-critical software. We're talking very critical. If you haven't heard it before, here's advice handed down through the generations: You don't mess with someone's pay. One of our products deals directly with pay. For that reason, even five minutes of downtime is a major headache for everyone on the team, and for everyone who supports the product outside of tech.

We've gone through real incidents, prepped for future ones, and talked about failover, but we had never run a game day. The idea had been floating around in my head for a while, with the simple goal of understanding our team's response times, debugging muscles, and communication skills. We recently ran one, and the results were amazing. Let me share our journey with you!

A game day simulates a failure or event to test systems, processes, and team responses. The purpose is to actually perform the actions the team would perform as if an exceptional event happened. These should be conducted regularly so that your team builds "muscle memory" on how to respond. Your game days should cover the areas of operations, security, reliability, performance, and cost.

Source: Game Day - AWS Well-Architected Framework (no date). Available at: https://wa.aws.amazon.com/wellarchitected/2020-07-02T19-33-23/wat.concept.gameday.en.html (Accessed: 23 February 2024).

Naturally, I looked at what others had done, but couldn't find anything comprehensive. There is a plethora of articles, but none of them offered a framework of sorts. Sure, many had details on what you should do and things to monitor, but none had a proper doc to follow or ways to grow the team. Some were way too formal for a small team. So I built something myself.

Goals

At first, the goal was simple: understanding our team's response times, debugging muscles, and communication skills. As an engineering leader, that wasn't all I wanted to accomplish. Subjecting your team to stress, even artificial stress, can be a lot. If I were going to do so, I wanted the team to grow from it. Here are the things I wanted to focus on:

- Measure response times

- Measure the accuracy of self-reporting

- See when the on-call person will naturally escalate the issue

- Test our monitors & synthetics

- Find system weaknesses

- Develop the team's:

  - Muscle memory for debugging issues

  - Confidence in systems they aren't in all the time

  - Communication in high-stress situations
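To put numbers on the first few goals, it helps to record a handful of timestamps per incident and derive the durations afterwards. Here is a minimal sketch; the field names and the example timestamps are made up for illustration, so adapt them to whatever your logbook actually records.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class IncidentTimeline:
    """Timestamps noted by the game day runner (hypothetical field names)."""
    injected_at: datetime             # when the chaos was introduced
    acknowledged_at: datetime         # when the on-call person noticed it
    escalated_at: Optional[datetime]  # when peers were pulled in, if ever
    resolved_at: datetime             # when the environment was healthy again


def response_metrics(t: IncidentTimeline) -> dict:
    """Derive the durations worth discussing in the review."""
    return {
        "time_to_acknowledge": t.acknowledged_at - t.injected_at,
        "time_to_escalate": (t.escalated_at - t.injected_at) if t.escalated_at else None,
        "time_to_resolve": t.resolved_at - t.injected_at,
    }


# Illustrative values only, not data from our game day.
timeline = IncidentTimeline(
    injected_at=datetime(2024, 2, 1, 10, 0),
    acknowledged_at=datetime(2024, 2, 1, 10, 12),
    escalated_at=datetime(2024, 2, 1, 10, 50),
    resolved_at=datetime(2024, 2, 1, 11, 30),
)
metrics = response_metrics(timeline)
for name, value in metrics.items():
    print(f"{name}: {value}")
```

Keeping escalation as its own timestamp makes it easy to see how much of the total downtime passed before anyone asked for help.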

Stages

The process was fairly simple: plan, run, and review a game day.

- Planning

  - At least a month for you to plan, warn, and book time for the team to have a game day.

  - Block the team's calendar and warn the organization of the upcoming game day that will knock out the team's availability.

  - Develop your chaos.

    - I tried to focus on issues that could be solved in 10-15 minutes once they had been identified.

    - I only put out chaos that could be caught by our synthetics system (an in-house puppeteer orchestrator).

    - I only targeted one staging environment to eliminate the need to context switch or gain new credentials.

- Game Day

  - A solid 5-6 hour block to run your chaos engineering inside.

  - Order food if you're in the office. Seriously, it's the least you can do for putting this much stress on the team.

  - Leave time both before and after the block for the team to plan and reflect, respectively.

- Review

  - An hour-long call to go over what happened, review the accuracy of the people involved, and talk through proposed solutions.
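The three constraints under "Develop your chaos" can be turned into a quick checklist for vetting candidate scenarios before game day. This is a rough sketch; the `Scenario` fields, the `"staging"` environment name, and the example candidates are assumptions for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    """A candidate piece of chaos (hypothetical structure)."""
    name: str
    environment: str
    expected_fix_minutes: int   # estimated fix time once the cause is identified
    caught_by_synthetics: bool  # would the synthetic checks notice it?


def vetting_problems(scenario, allowed_environment="staging", max_fix_minutes=15):
    """Return the reasons a scenario breaks the planning constraints."""
    problems = []
    if scenario.environment != allowed_environment:
        problems.append(f"targets {scenario.environment}, not {allowed_environment}")
    if scenario.expected_fix_minutes > max_fix_minutes:
        problems.append(f"fix estimated at {scenario.expected_fix_minutes} min, over {max_fix_minutes}")
    if not scenario.caught_by_synthetics:
        problems.append("not detectable by synthetics")
    return problems


# Example candidates with made-up estimates.
candidates = [
    Scenario("DNS misconfiguration", "staging", 10, True),
    Scenario("CDN failure", "staging", 40, False),
]
report = {s.name: vetting_problems(s) for s in candidates}
for name, problems in report.items():
    print(name, "->", problems or "ok")
```

A scenario with an empty problem list is a reasonable candidate; anything else is worth reshaping or dropping before it reaches the playbook.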

Documentation

I'm a big fan of docs and a bigger fan of Notion. I made four Notion documents while planning, all of which were hidden from the team until the appropriate times:

- An intro doc [Free Notion Template]

  - A document that you can send out to go over what a game day is when you send out the calendar invite.

  - Also vets game day as valuable in the team's eyes and lets them know there is free lunch (a big plus).

  - Released to the team with the calendar invite.

- The actual game day document [Free Notion Template]

  - This document introduces a story of sorts, goes over any questions they may have, and sets up a set of rules to follow.

  - Contains an incident logbook and a faux on-call schedule for them to fill out.

  - Released to the team the afternoon before game day.

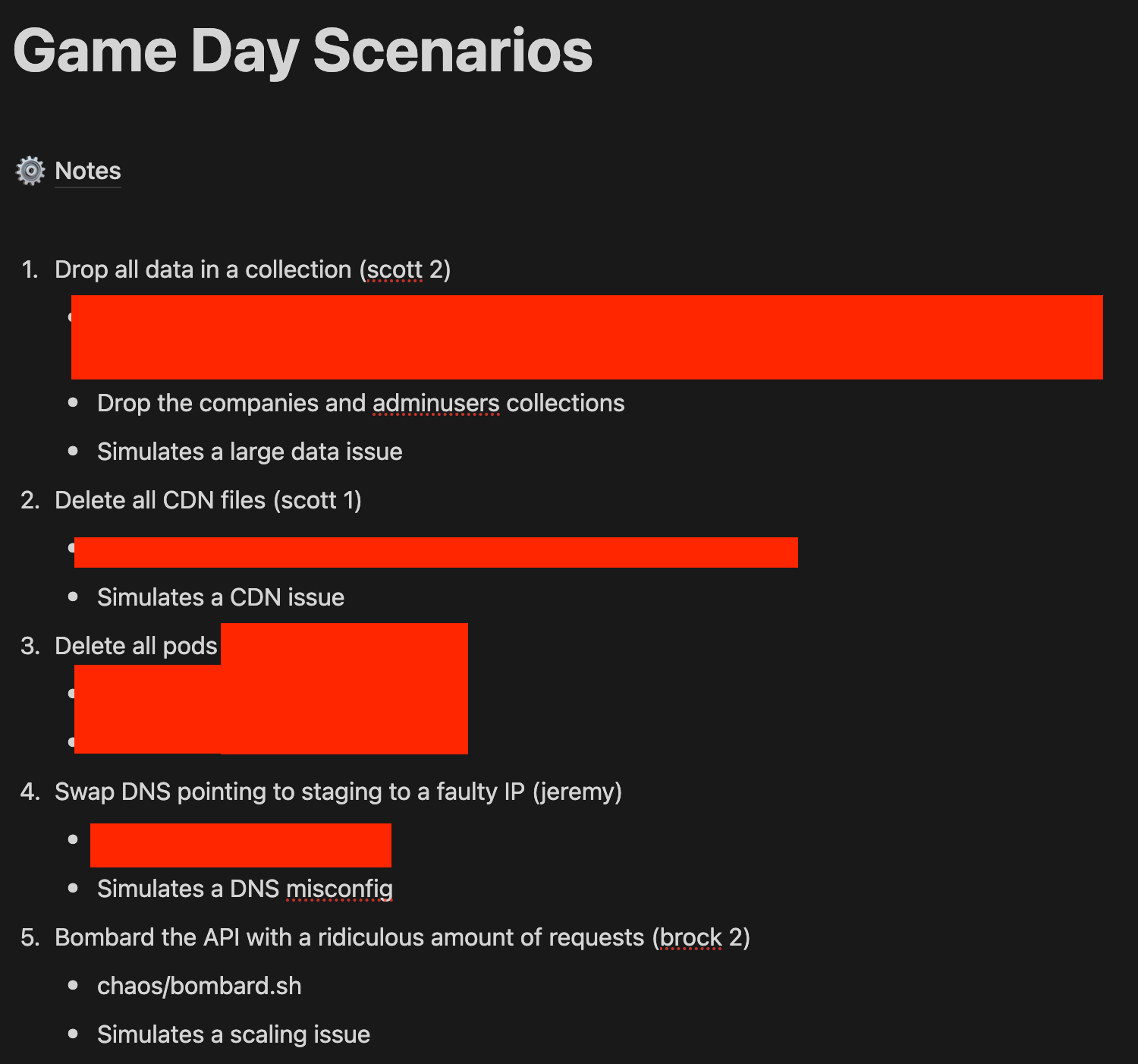

- The game-day playbook

  - A document for the runner of game day to actually run the scenarios. These should be pre-baked scenarios with forecasted outcomes, and preassigned people.

  - This document also serves as a place for you to scribe during the events while you await outcomes.

  - Reviewed with the team after game day.

- The review doc

  - A doc to cover the actual incident start/end times.

  - Note any wins you saw and any losses.

  - Reviewed with the team after game day.

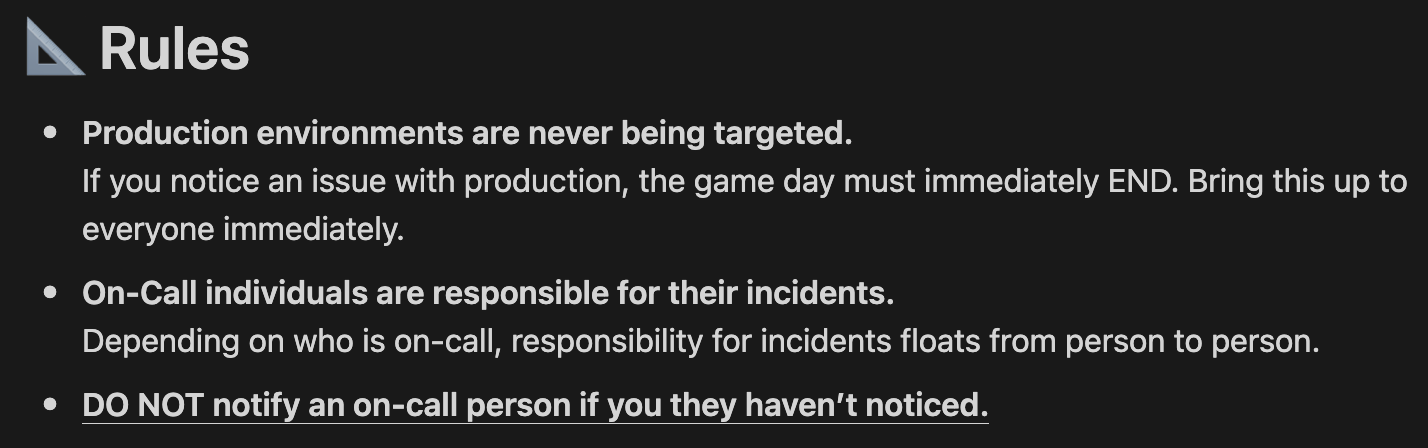
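If you would rather keep the playbook and the faux on-call schedule as data than as prose, the sketch below shows one possible shape. The engineer names, shift times, and forecasts are placeholders, and the structure itself is an assumption rather than a copy of the Notion templates.

```python
from dataclasses import dataclass
from datetime import time
from typing import Optional


@dataclass
class Shift:
    engineer: str
    start: time
    end: time  # exclusive


@dataclass
class PlaybookEntry:
    scenario: str
    inject_at: time
    forecast: str           # what the runner expects to happen
    scribe_notes: str = ""  # filled in by the runner during the event


def on_call_at(schedule, moment) -> Optional[str]:
    """Return whoever is on call at the given time, or None if nobody is."""
    for shift in schedule:
        if shift.start <= moment < shift.end:
            return shift.engineer
    return None


# Placeholder schedule and scenarios for illustration.
schedule = [
    Shift("engineer-a", time(9, 0), time(11, 0)),
    Shift("engineer-b", time(11, 0), time(13, 0)),
]
playbook = [
    PlaybookEntry("DNS misconfiguration", time(9, 30), "Synthetics alert; on-call fixes the record"),
    PlaybookEntry("CDN failure", time(11, 15), "Synthetics alert; on-call escalates to a peer"),
]
owners = {entry.scenario: on_call_at(schedule, entry.inject_at) for entry in playbook}
print(owners)
```

Resolving each scenario against the schedule ahead of time tells the runner who should own each incident, which is handy when holding people accountable in review.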

Rules are important

Anyone who has worked with me or for me knows that I'm very expectation-driven. I like giving my team and engineers expectations that they can use to drive decision-making in their own realms. For game day, I established three rules (expectations).

- Production environments are never targeted

  This is important to point out to your team and makes sure that they know production is never an intended target.

- On-Call people are responsible for incidents on their time

  This immediately fleshes out the importance of the on-call schedule, and how they will handle their issue, simulating the "I'm the only one seeing this right now" behavior. This also makes sure that I'm able to hold the team members accountable during review.

- Don't notify an on-call person

  Again this harps on the "I'm the only one seeing this right now" behavior, because this is something that I wanted to test. It further illustrates the importance of being on-call.

I also made sure to time-box everything.
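If any of your chaos is scripted, the first rule and the time-box are both easy to enforce in code instead of relying on memory. Below is a minimal sketch; the environment names and the 45-minute limit are assumptions, so pick values that match your own setup.

```python
from datetime import datetime, timedelta

# Assumed allowlist: only environments named here may ever be targeted.
ALLOWED_TARGETS = {"staging"}


class UnsafeTargetError(Exception):
    """Raised when a scenario points at an environment that is not allowed."""


def assert_safe_target(environment: str) -> None:
    """Refuse to proceed unless the target is explicitly allowlisted."""
    if environment not in ALLOWED_TARGETS:
        raise UnsafeTargetError(f"refusing to target {environment!r}")


def time_box_expired(started_at: datetime, now: datetime,
                     limit: timedelta = timedelta(minutes=45)) -> bool:
    """True once a scenario has run for its full time-box."""
    return now - started_at >= limit


for target in ("staging", "production"):
    try:
        assert_safe_target(target)
        print(f"{target}: ok to inject")
    except UnsafeTargetError as err:
        print(f"{target}: blocked ({err})")
```

An allowlist is the safer design choice here: a typo in an environment name gets blocked instead of slipping past a check that only looks for the word "production".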

Our Outcome

As quoted from our review doc:

Goods

- The on-call schedule was established early, and logical grouping was formed so that shift transitions weren’t a big problem

- Pretty accurate descriptions

- Ownership was great

Bads

- Escalation improved throughout the day, however, the longer it took to escalate the issue, the longer the issue went on.

- People interrupted despite my plea

- No one nailed a specific time

I pointed out several things I noticed, both bad and good, and the team talked them through. In general, I noticed that the longer they took to escalate an issue to their peers, the longer the site was down (1.5 hours in one instance). This was something they all wanted to improve on after review. That said, I was pleased with the ownership they took on their incidents. Even our least experienced engineer was able to captain an incident like a pro. That's a great outcome.

Another thing I noted was that people interrupted, despite being given ample warning about the team's unavailability. I made it a point to have our departments route all dev communication through me on the day so that I could triage where appropriate. There was one person, though, who ignored that and messaged someone on the team multiple times about an issue they were having. It was fairly low-priority. In the end, I think it was a test of the engineer's ability to say no and triage. Still, heed the warning: people don't always respect your team's time.

The last bad thing noted to the team was inaccurate incident recollection. There were a few tools to aid them, but I think in the heat of the day they prioritized being hyper-vigilant over being retrospective. I walked them through the tools they could have used in a post-mortem, and we went on.
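To make recollection accuracy concrete in the review, you can compare the times the team wrote in the logbook against the times the runner recorded. A small sketch follows; the timestamps are invented for illustration and the dictionary layout is an assumption.

```python
from datetime import datetime


def minutes_off(actual: datetime, reported: datetime) -> float:
    """Absolute gap between the runner's time and the self-reported time."""
    return abs((reported - actual).total_seconds()) / 60


# Invented example: the runner's record versus the on-call person's logbook.
actual = {
    "start": datetime(2024, 2, 1, 10, 12),
    "end": datetime(2024, 2, 1, 11, 30),
}
reported = {
    "start": datetime(2024, 2, 1, 10, 20),
    "end": datetime(2024, 2, 1, 11, 25),
}
errors = {key: minutes_off(actual[key], reported[key]) for key in actual}
for key, gap in errors.items():
    print(f"{key}: off by {gap:.0f} min")
```

Even a rough gap like this gives the team a concrete number to improve on for the next game day.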

Would I Do It Again?

Absolutely. As a team, we learned a bunch about how we react to a variety of issues, and I watched their collaboration, ownership, and abilities grow throughout the day. It was an absolute win for the company in all regards, and we're more resilient because of that.

Not only is Game Day a great time to test your team and system resiliency, but it's also a time to see members of your team grow, gain confidence, and practice for situations that will arise in the future.

I hope this gives you enough information to go off and do your own game day. Check out the Notion templates above, make a copy, and form it to fit your organization.
